Day 26: Is This Real?

Recognizing Deepfakes – “Is This Really Real?”

Ever wonder if that “celebrity cooking a gourmet meal” video you saw was real or just some digital magic? Well, you’re not alone! Today, we’re diving into deepfakes: a fun tech word for a not-so-fun security risk. Because, hey, it’s 2024, and the internet seems to throw new surprises at us every day, right?

source: Artificial Intelligence

What Are Deepfakes?

Deepfakes are realistic-looking fake images, videos, or audio clips produced with artificial intelligence (AI). By combining and manipulating someone’s voice, photos, and videos, AI can create the impression that a person said or did something they never did. Deepfakes began as artistic entertainment, but they are increasingly being used to spread false information, and they can be harmful if we don’t know how to recognize them.

Why Do Deepfakes Matter?

Deepfakes can spread rumours and misinformation, damage reputations, and make it hard to trust what we see online. Imagine seeing a video of a politician making outrageous statements, only to learn later that it was fake! That’s the tricky part: deepfakes are designed to look natural, so they can undermine public trust and threaten personal security.

source: SoSafe

How Can You Spot Deepfakes?

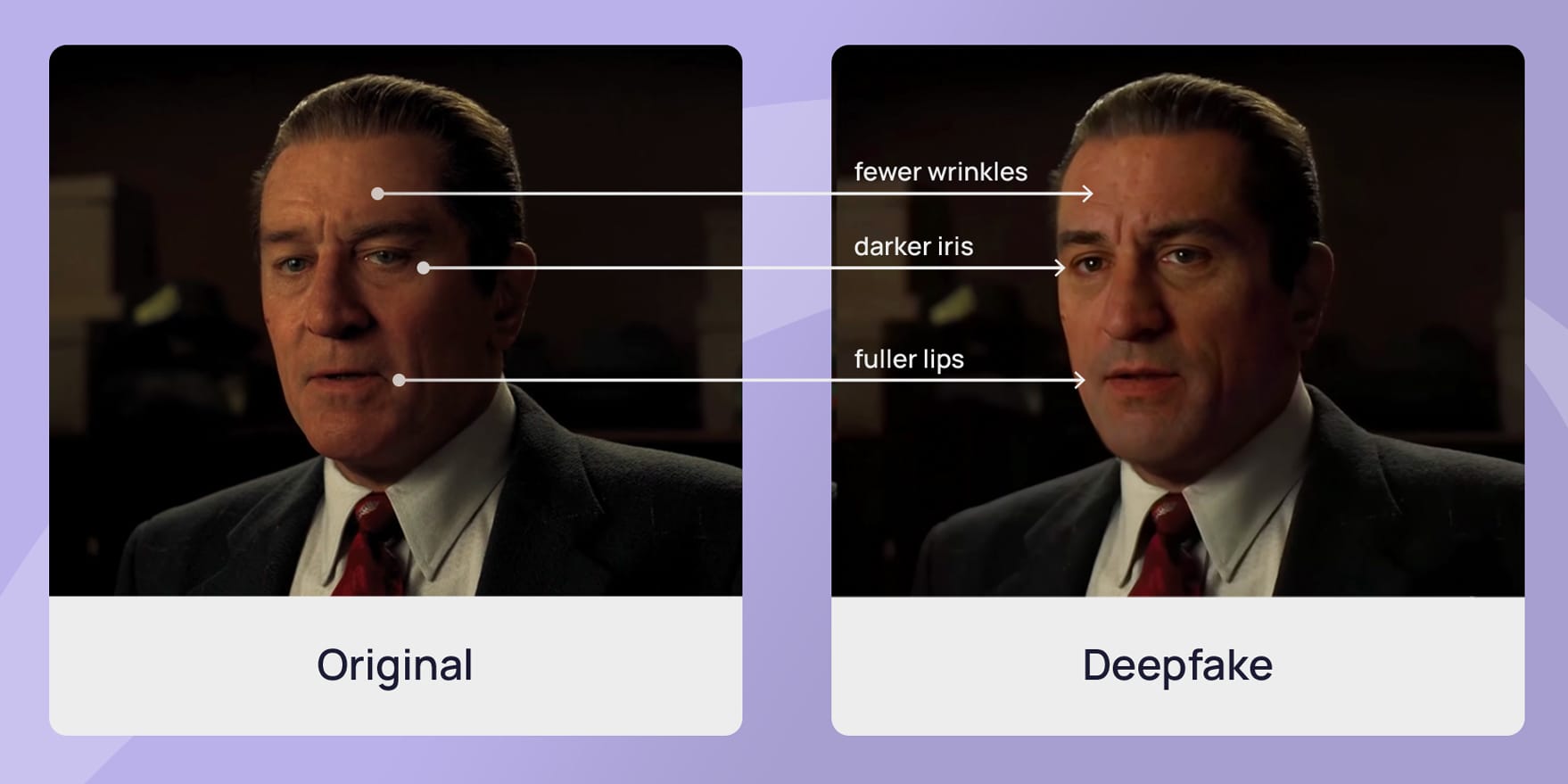

Look for Visual Clues

Deepfake technology isn’t perfect! Deepfakes often show slight blurriness, unnatural blinking patterns, or strange reflections. So if a person’s face seems “off,” there’s a chance it could be AI-generated.

Watch for Sound Issues

Listen carefully: deepfakes sometimes have audio that doesn’t match the visuals, such as lip movements that are out of sync with the speech or an unusual voice pitch.

Check the Source

If you see a shocking video, look for a credible source that confirms whether it is authentic. Trusted news outlets often debunk deepfake videos quickly, so it’s worth checking.

Use Fact-Checking Tools: Websites like Snopes and FactCheck.org can help you verify whether a video is genuine or manipulated.

Deepfake Protection Tips

To keep your images and videos from being misused in deepfakes, consider making your social media accounts private and avoid sharing sensitive photos or videos publicly. Remember, the more accessible your data is, the easier it is for AI to mimic you!

Call-to-Action: Double-check your social media privacy settings today to help protect your digital identity.

What Can You Do?

Stay Informed: Understanding how deepfakes work is the first step in recognizing them. Keep up with news about digital manipulation techniques.

Share Knowledge: Educate friends and family about deepfakes and how to spot them. The more people know, the harder it is for misinformation to spread.

Report Misinformation: If you encounter a suspicious video, report it to the social media platform where you found it or to a fact-checking website.

Stay Alert, Stay Safe!

As we wrap up this discussion on deepfakes during Cybersecurity Awareness Month, remember: knowledge is power! Share this article with your friends and family so they can stay informed and vigilant against digital deception. Together, we can create a safer online environment!

Deepfakes are getting better, but so are our skills at recognizing them! By staying informed, we protect ourselves and help build a more secure online world.

By understanding deepfakes and their implications, you’re taking an essential step toward protecting yourself and others from online misinformation. Stay aware, stay safe!

#CyberSecurityAwareness #Deepfakes #DigitalSafety #OnlineSecurity #AI